BERT

How does BERT help in SEO?

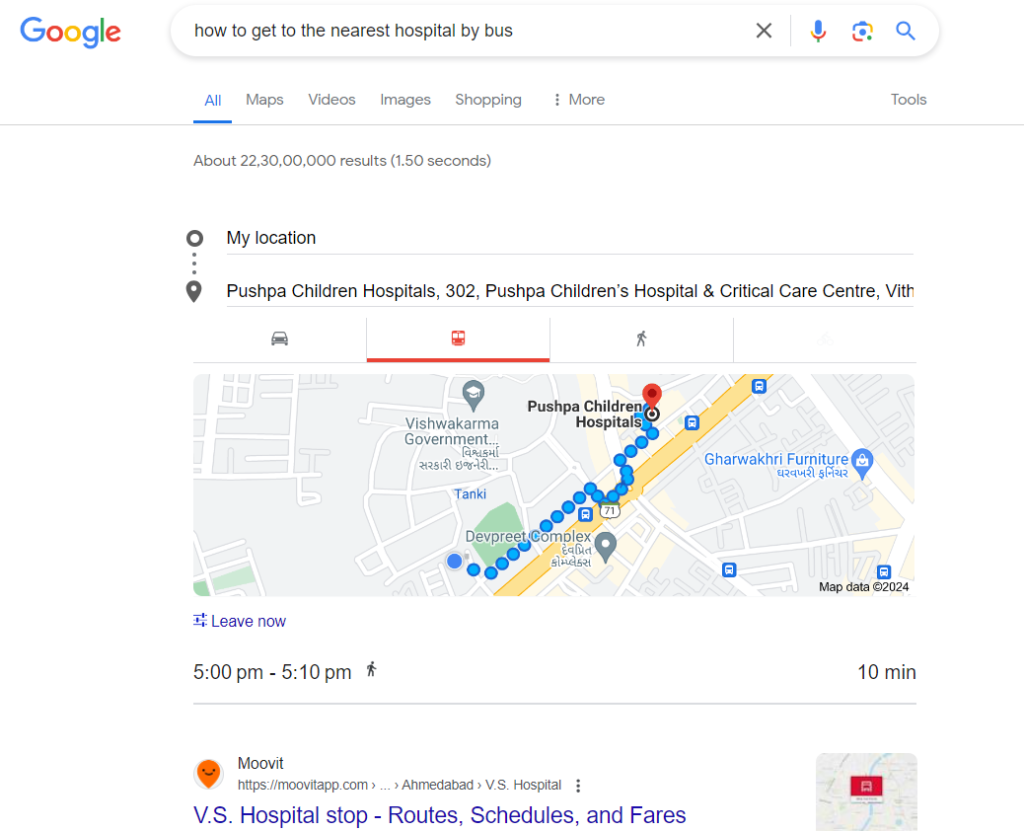

Consider a user who searches for “how to get to the nearest hospital by bus.”

Before BERT: The search engine might have focused primarily on the keywords “hospital,” “bus,” and “nearest.” Without understanding how those words relate to one another, it might have returned bus schedules or nearby bus stops rather than directions to the hospital by bus.
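The keyword-only behavior described above can be illustrated with a toy sketch. This is not how any real search engine ranks pages. The stopword list, the two sample documents, and the function names `keywords` and `keyword_score` are all assumptions made up for illustration. The point is only that once small words such as “to” and “by” are discarded, a page of directions and a page of bus schedules look equally relevant.

```python
# Toy keyword matcher: an illustrative sketch, not a real ranking algorithm.
# The stopword list and sample documents below are invented for this example.
STOPWORDS = {"how", "to", "get", "the", "by", "a", "for", "and", "at"}


def keywords(text):
    """Lowercase the text, split on whitespace, and drop stopwords."""
    return {word for word in text.lower().split() if word not in STOPWORDS}


def keyword_score(query, document):
    """Count the query keywords that also appear in the document."""
    return len(keywords(query) & keywords(document))


query = "how to get to the nearest hospital by bus"

documents = {
    "directions": "directions to the nearest hospital by bus",
    "schedule": "bus schedule for the stop nearest the hospital",
}

scores = {name: keyword_score(query, text) for name, text in documents.items()}
print(scores)  # {'directions': 3, 'schedule': 3}
```

Both documents score 3 because each contains “nearest,” “hospital,” and “bus.” The matcher has no way to tell that the first page answers the question and the second does not, which is the gap a context-aware model is meant to close.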

After BERT: The search engine can read the words of the query in context, including small words such as “to” and “by,” and recognize that the user wants to travel to the hospital using public transportation. As a result, it can deliver more relevant results, such as directions to the nearest hospital that is reachable by bus.

In this example, BERT improves the search experience by helping the search engine interpret the user’s intent more accurately, which leads to more relevant results. For SEO, this favors content that clearly answers the searcher’s question over content that only repeats the query’s keywords.